- How to convert what is on the Windows screen into text

* Capture the screen

Press Win + Shift + S.

Drag with the mouse to select the area of the screen you need.

- Note: the captured image file is saved to this folder:

%USERPROFILE%\Pictures\Screenshots

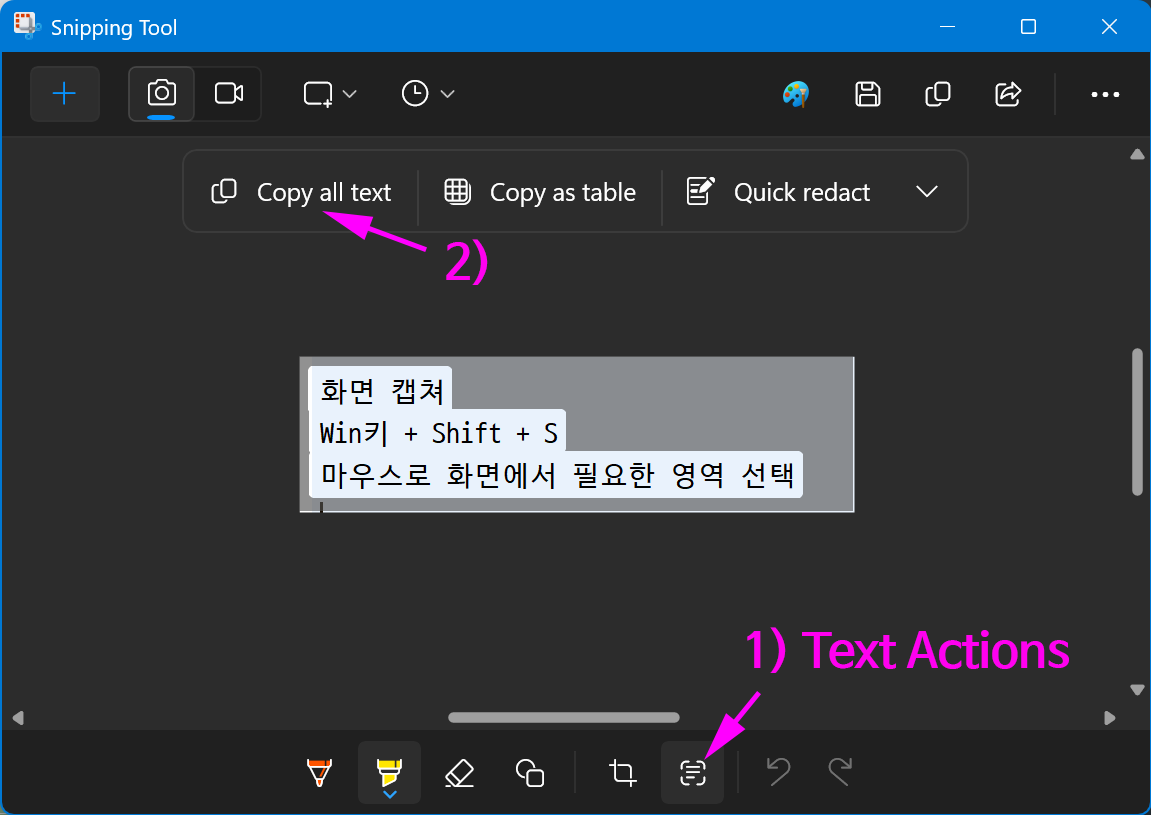
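If you want to open the saved capture from a script instead of File Explorer, the folder above can be resolved and scanned for the newest file. This is a minimal sketch, assuming Python 3 and that captures are auto-saved there as `.png` files; the helper names `screenshots_dir` and `latest_screenshot` are hypothetical, and a Pictures folder redirected elsewhere (for example to OneDrive) would need a different path.

```python
import os
from pathlib import Path
from typing import Optional


def screenshots_dir() -> Path:
    # Hypothetical helper: build %USERPROFILE%\Pictures\Screenshots.
    # Falls back to the home directory if USERPROFILE is not set (e.g. non-Windows).
    profile = os.environ.get("USERPROFILE", str(Path.home()))
    return Path(profile) / "Pictures" / "Screenshots"


def latest_screenshot(folder: Path) -> Optional[Path]:
    """Hypothetical helper: return the most recently modified .png in folder, or None."""
    if not folder.is_dir():
        return None
    # Assumption: captures are saved as PNG; match the extension case-insensitively.
    pngs = [p for p in folder.iterdir() if p.is_file() and p.suffix.lower() == ".png"]
    return max(pngs, key=lambda p: p.stat().st_mtime, default=None)


if __name__ == "__main__":
    folder = screenshots_dir()
    print("Folder:", folder)
    print("Latest capture:", latest_screenshot(folder))
```

Picking the file by modification time rather than by name avoids depending on the exact file-naming pattern, which this post does not specify.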

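* Convert the image to text

Click the 'Snipping Tool' notification that appears after the capture.

1) Select 'Text Actions' at the bottom.

2) Select 'Copy all text' at the top.

Paste the converted text wherever you need it (Ctrl+V).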
